Obsidian Notes AI

I built this project to learn how to use generative AI APIs and to practice my Next.js and tRPC skills.

It's built with Next.js, tRPC and Tailwind via the create-t3-app template, which was a very smooth experience. I am using the Google Gemini SDK with the Gemini 2.0 Flash model, as well as a text embedding model.

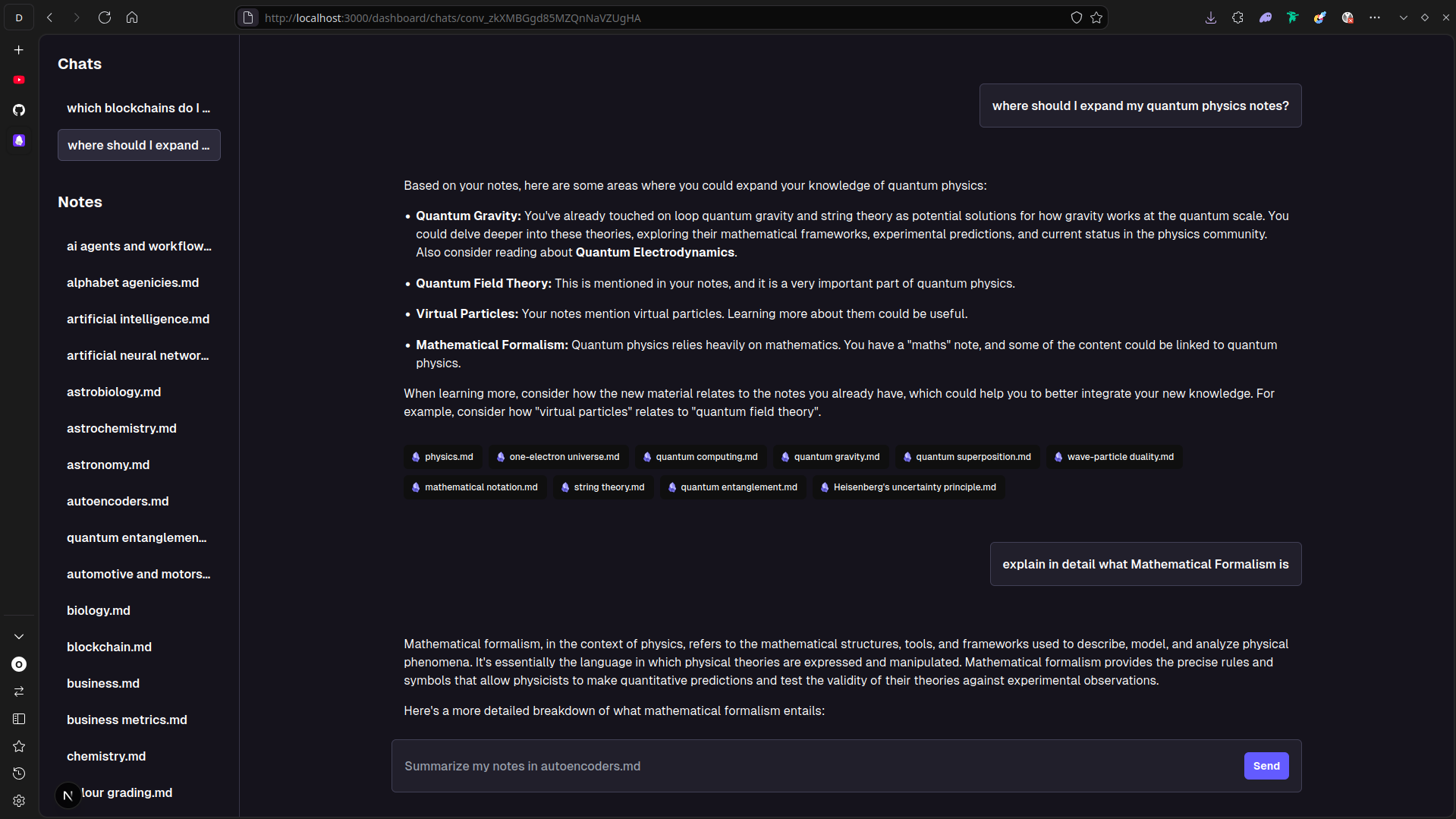

You can upload your Markdown files (mine come from Obsidian) and ask the LLM questions about your notes.

It'll reference your notes if it used them for its response. Conversations are saved, and the chat history is included in each prompt.
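As a rough illustration of how chat history and retrieved notes might be combined into one prompt, here is a minimal sketch. The `ChatMessage` and `NoteSnippet` types, the `buildPrompt` function, and the instruction wording are all hypothetical and not taken from the project; the real app may instead rely on the SDK's own chat features.

```typescript
// Hypothetical message and note shapes, for illustration only.
interface ChatMessage {
  role: "user" | "model";
  text: string;
}

interface NoteSnippet {
  title: string;
  content: string;
}

// Assemble one prompt string from the retrieved notes, the saved
// conversation, and the new question.
function buildPrompt(
  question: string,
  history: ChatMessage[],
  notes: NoteSnippet[],
): string {
  const noteSection =
    notes.length > 0
      ? notes.map((n) => `### ${n.title}\n${n.content}`).join("\n\n")
      : "(no relevant notes found)";

  const historySection =
    history.length > 0
      ? history.map((m) => `${m.role}: ${m.text}`).join("\n")
      : "(none)";

  return [
    "Answer the question using the notes below. Name the title of any note you use.",
    "Notes:",
    noteSection,
    "Conversation so far:",
    historySection,
    `Question: ${question}`,
  ].join("\n\n");
}
```

Asking the model to name the notes it used is one simple way to get the "references" behaviour described above without any post-processing.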

Stack

TypeScript

Next.js

tRPC

Supabase

Tailwind

What I learned

During this project I learned a lot about the more practical aspects of generative AI.

I knew of things like RAG and prompt engineering, but this gave me a much clearer picture of how they're actually implemented.

I use text embeddings to find the relevant notes to provide as context to the LLM, which was really interesting to learn.
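The retrieval step can be sketched roughly as follows, assuming each note's embedding has already been computed and stored, and assuming cosine similarity as the ranking metric. The `EmbeddedNote` type and both function names are hypothetical; the project may well do this ranking inside the database instead of in application code.

```typescript
// Hypothetical shape of a stored note with its precomputed embedding.
interface EmbeddedNote {
  title: string;
  content: string;
  embedding: number[];
}

// Cosine similarity: dot product divided by the product of vector lengths.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Embedding dimensions differ");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    const x = a[i] ?? 0;
    const y = b[i] ?? 0;
    dot += x * y;
    normA += x * x;
    normB += y * y;
  }
  // Treat a zero vector as unrelated to everything.
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the topK notes most similar to the query embedding, best first.
function findRelevantNotes(
  queryEmbedding: number[],
  notes: EmbeddedNote[],
  topK = 3,
): EmbeddedNote[] {
  return notes
    .map((note) => ({
      note,
      score: cosineSimilarity(queryEmbedding, note.embedding),
    }))
    .sort((x, y) => y.score - x.score)
    .slice(0, topK)
    .map(({ note }) => note);
}
```

The question is embedded with the same model as the notes, and the top-ranked notes are then passed to the LLM as context.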

I also used Next.js and tRPC for this project (as opposed to my usual SvelteKit and Elysia), which refreshed my knowledge: I last used tRPC as my main tool about 2 years ago, and Next.js/React almost 4 years ago. I always found Next.js unnecessarily tricky to work with, unlike SvelteKit, but using it in depth taught me some better practices and conventions, which made the experience more enjoyable. I had also forgotten how great tRPC is to use. The type safety works super well and makes for a great DX.

One thing I struggled with was streaming text from the LLM through my tRPC backend to my frontend. I am still looking for a relatively simple way to do this.